Basically, computers today are running on Stone Age tools, literally silicon. How close are we to other options, from Star Trek Data’s photonic processors to organoid computers?

That’s a great question. The frontier between silicon-electronic computing (most of what we have today) and more exotic “Star Trek style” biological or photonic processors is an active research area. We have some promising proofs of concept and early prototypes, but also big gaps to close. I’ll walk through where things stand for two of your examples (photonic processors and organoid computing), their advantages and challenges, and how far away a really usable “Star Trek style” system might be. If you want, I can also survey other alternatives (quantum, molecular, etc.).

Photonic Processors (“light instead of electrons”)

What is it & why it matters

- Photonic processors use photons (light) rather than electrons to carry and/or compute information. Optical links can carry very high bandwidth, generate less resistive heat, and lose less energy over distance in some contexts. Light also enables parallelism tricks that electronics struggle with, such as sending many independent signals through one waveguide on different wavelengths.

- Applications that particularly benefit: communications, data center interconnects, AI inference & some neural network workloads, optical switching, etc.

What has been achieved so far

Some headline results:

- MIT researchers built a fully integrated photonic chip that performs all the key computations of a deep neural network optically, with classification tasks done in under a nanosecond.

- Photonic in-memory computing (“photonic tensor cores”) has been demonstrated, reducing some of the memory-bandwidth bottlenecks.

- There’s ongoing investment & development: for example, the EU is putting funds into photonic chip plants, and industry R&D is growing.
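To make “computations done optically” concrete: many integrated photonic neural-network chips build their matrix multiplications from meshes of Mach-Zehnder interferometers (MZIs), 2×2 devices whose phase settings steer light between two outputs. The sketch below is a minimal idealized model, assuming lossless 50:50 beam splitters in one common matrix convention; it is not a model of the MIT chip or any specific device. Larger N×N matrices come from arranging many of these 2×2 blocks into a mesh.

```python
import cmath
import math

# Idealized, lossless 2x2 optical components as complex matrices (a sketch, not a device model).
Matrix = list[list[complex]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 2x2 complex matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def apply(m: Matrix, field: list[complex]) -> list[complex]:
    """Propagate the complex field amplitudes in two waveguides through a component."""
    return [m[0][0] * field[0] + m[0][1] * field[1],
            m[1][0] * field[0] + m[1][1] * field[1]]


# Assumed convention for an ideal 50:50 beam splitter (directional coupler).
_S = 1 / math.sqrt(2)
BEAM_SPLITTER: Matrix = [[_S, 1j * _S], [1j * _S, _S]]


def phase_shifter(phi: float) -> Matrix:
    """Delay the light in the upper waveguide by phase phi (radians)."""
    return [[cmath.exp(1j * phi), 0], [0, 1]]


def mzi(theta: float, phi: float) -> Matrix:
    """Mach-Zehnder interferometer: input phase phi, splitter, internal phase theta, splitter.
    Matrices act right to left, i.e. in the order the light meets the components."""
    return matmul(BEAM_SPLITTER, matmul(phase_shifter(theta), matmul(BEAM_SPLITTER, phase_shifter(phi))))


def power(field: list[complex]) -> list[float]:
    """Optical power in each waveguide is the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in field]


if __name__ == "__main__":
    # Light enters the top waveguide only; tuning theta re-routes it between the outputs.
    for theta in (0.0, math.pi / 2, math.pi):
        out = power(apply(mzi(theta, 0.0), [1, 0]))
        print(f"theta={theta:.3f}: output powers {[round(p, 6) for p in out]}")
```

The printout should show the light going fully to the lower output at theta = 0, splitting evenly at theta = π/2, and staying in the upper output at theta = π. In every case the total power stays 1, because the ideal device is unitary (lossless).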

Key challenges

Though things are promising, there are big engineering and practical obstacles:

- Nonlinear operations: Electronic transistors naturally do nonlinear things (activation functions, comparisons, switching, etc.). Photonic components struggle to provide efficient, compact, low-power nonlinear elements, so some designs hand these steps to electronics in hybrid photonic-electronic systems.

- Loss, size, integration: Light traveling through waveguides, phase shifters etc. experiences loss; maintaining signal strength, fidelity, and integrating many optical components on chip is hard. Also packaging and interfacing with existing electronics is nontrivial.

- Manufacturability & cost: Many of these photonic devices are still in the lab; scaling to millions of units, reliability over long lifetimes, cost per device, yield — these are big hurdles.

- Memory / switching & routing: While light is fast, there are difficulties in how to store information (photons don’t “sit still” like electrons in capacitors) and how to switch or modulate light efficiently, especially at large scales.
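A quick loss budget shows why loss and integration scale so badly together. Optical losses in decibels add along a path, and the surviving power fraction is 10^(−dB/10). The sketch assumes a hypothetical 0.2 dB per MZI, 1 dB per fiber-to-chip coupler, and that light crosses roughly N interferometers in an N-port rectangular mesh; real numbers vary widely by platform.

```python
def transmitted_fraction(loss_db: float) -> float:
    """Convert an optical loss in dB into the fraction of input power that survives."""
    return 10 ** (-loss_db / 10)


def path_loss_db(n_ports: int, loss_per_mzi_db: float, coupling_loss_db: float) -> float:
    """Loss along one path through an N-port interferometer mesh.

    Assumes (hypothetically) that light crosses about n_ports MZIs in series,
    plus one fiber-to-chip coupler on the way in and one on the way out.
    Losses expressed in dB simply add.
    """
    return n_ports * loss_per_mzi_db + 2 * coupling_loss_db


if __name__ == "__main__":
    for n in (8, 64, 256):
        db = path_loss_db(n, loss_per_mzi_db=0.2, coupling_loss_db=1.0)
        print(f"N={n:3d}: {db:5.1f} dB total, {transmitted_fraction(db):.3g} of input power survives")
```

With these assumed figures the surviving fraction falls from roughly 44% at N = 8 to about 3% at N = 64 and to a few millionths at N = 256, which is why per-component loss, not just component count, decides how large a photonic processor can usefully get.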

How far off to something like “Data’s processor” style?

(In Star Trek canon, Data’s brain is actually “positronic,” and the show never explains how it works; presumably it would be extremely fast and massively parallel, possibly relying on quantum effects, exotic materials, or even biological integration. Within photonics specifically:)

- For niche or specialized use-cases (like AI inference, optical switching, some communications), photonic processors may become competitive in 5-10 years (if scaling and cost problems are addressed). Some companies and governments are betting on that.

- For general-purpose computing replacing CPUs & GPUs broadly, probably more like 10-20+ years, depending on breakthroughs in fabrication, nonlinear optics, integration with electronic memory, etc.

Organoid / Biological / “Wetware” Computing

“Organoid Intelligence (OI)” refers to using biological constructs (brain organoids) to compute or assist computation. These are lab-grown mini-brains or brain tissue that exhibit some of the structure / function of neurons, neural networks etc.

What is done so far

- Organoids can be grown from human (or animal) stem cells; they form networks of neurons that show electrical activity. Researchers are exploring how to interface them with electronics (electrodes, sensors) to read their signals or stimulate them.

- There are examples of “living computers” (or semi-computers) built with organoids: small tasks of learning, signal classification or pattern detection in rudimentary form. These are far from general-purpose computing.

- Power/energy usage is low relative to massive silicon systems, but they require support systems (nutrients, life-support, maintaining cell health) which are complicated.
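Reading signals from organoids on electrode arrays usually starts with spike detection. One widely used simple method flags moments when the voltage crosses a threshold set at a multiple of the noise level, with the noise estimated robustly as median(|x|)/0.6745 so the spikes themselves barely bias it. The sketch below is a generic version with hypothetical defaults (threshold multiplier k, refractory window in samples), not the pipeline of any particular organoid lab.

```python
import random
import statistics


def detect_spikes(trace: list[float], k: float = 5.0, refractory: int = 30) -> list[int]:
    """Return sample indices where the trace first dips below -k * noise_sigma.

    noise_sigma is estimated as median(|x|) / 0.6745, which equals the standard
    deviation for pure Gaussian noise but is robust to a few large spikes.
    After a detection, the next `refractory` samples are skipped so that one
    spike spanning several samples is counted once.
    """
    noise_sigma = statistics.median([abs(x) for x in trace]) / 0.6745
    threshold = -k * noise_sigma
    spikes: list[int] = []
    next_allowed = 0
    for i, x in enumerate(trace):
        if i >= next_allowed and x < threshold:
            spikes.append(i)
            next_allowed = i + refractory
    return spikes


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic trace: unit Gaussian noise plus three injected negative spikes (hypothetical data).
    trace = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
    for start in (1_000, 4_000, 7_500):
        for offset, amplitude in enumerate((-8.0, -12.0, -6.0)):
            trace[start + offset] += amplitude
    print(detect_spikes(trace))
```

On this synthetic trace the detector should report indices at or right next to the three injected spikes; real recordings add filtering, artifact rejection, and spike sorting on top.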

Key challenges

- Lifespan and reliability: Organoids are living tissue; they have limited lifespan (often a few weeks to months) and variability in how they grow and behave. Ensuring stable operation over long periods is hard.

- Scalability: To get large computational power you need many, many neurons with reliable interconnections. Growing, controlling, replicating and interfacing those at scale is a massive challenge.

- Speed & interface: Neural signals (in biological tissue) are slower than electronic signals by many orders of magnitude; reading from and writing to that medium (sensing, stimulating) also introduces delays, noise, and low fidelity.

- Ethical / biological constraints: Using human-derived tissue (especially tissue that might approach higher functioning) raises questions about consciousness, welfare, ethical sourcing, and regulation. Experimental reproducibility and safety are also open issues.
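A back-of-the-envelope comparison makes the speed gap concrete. The figures below are rough order-of-magnitude values assumed for illustration: about a 1 kHz ceiling on neuron firing set by the ~1 ms refractory period, a 4 GHz CPU clock, ~100 m/s for fast myelinated axons, and about half the speed of light for signals on a circuit-board trace. Swap in your own.

```python
import math

# Illustrative order-of-magnitude figures (assumptions, not measurements).
NEURON_MAX_RATE_HZ = 1e3        # ~1 ms refractory period caps firing near 1 kHz
CPU_CLOCK_HZ = 4e9              # typical desktop CPU clock
AXON_SPEED_M_S = 100.0          # fast myelinated axons; unmyelinated ones are ~1 m/s
BOARD_SIGNAL_SPEED_M_S = 1.5e8  # roughly half the speed of light on a circuit-board trace


def orders_of_magnitude(fast: float, slow: float) -> float:
    """How many powers of ten separate two rates or speeds."""
    return math.log10(fast / slow)


if __name__ == "__main__":
    print(f"switching/firing rate gap: {orders_of_magnitude(CPU_CLOCK_HZ, NEURON_MAX_RATE_HZ):.1f} orders")
    print(f"signal propagation gap:    {orders_of_magnitude(BOARD_SIGNAL_SPEED_M_S, AXON_SPEED_M_S):.1f} orders")
```

With these inputs the two gaps come out at about 6.6 and 6.2 orders of magnitude; biology’s counterweight is massive parallelism and low power, not raw speed.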

How far off

- For small, niche tasks (e.g. specialized pattern recognition, drug testing, passive computational assist), organoid systems are already being used in labs. So that is happening now in experimental, research settings.

- For something like replacing a CPU or being a computer in the Star Trek sense (fast, reliable, long-lived, general purpose) — likely decades away unless there are some radical breakthroughs (e.g. in synthetic biology, neural engineering, bio-fabrication). 20-50+ years is a plausible ballpark.

Combined / Other Alternatives

- Quantum computing is another track; very different uses, but some overlapping goals (e.g. solving certain problems faster). It’s moving forward, but also with its own challenges.

- Neuromorphic electronics: devices that mimic neural architectures (still electronic, but structured differently) are already in use or prototyped. Less exotic, but possibly more feasible short-term than full biological systems.
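Many neuromorphic chips compute with spiking neurons rather than continuous activations. A standard textbook abstraction is the leaky integrate-and-fire (LIF) neuron, τ·dV/dt = −(V − V_rest) + R·I: the membrane voltage integrates input current, leaks back toward rest, and emits a spike and resets when it crosses a threshold. This is a minimal forward-Euler sketch with made-up, dimensionless parameters; real chips implement their own, often more elaborate, neuron models.

```python
def simulate_lif(
    current: list[float],
    dt: float = 1e-4,      # time step in seconds
    tau: float = 0.02,     # membrane time constant in seconds
    r: float = 1.0,        # membrane resistance (dimensionless in this sketch)
    v_rest: float = 0.0,
    v_th: float = 1.0,     # spike threshold
    v_reset: float = 0.0,
) -> list[float]:
    """Simulate one leaky integrate-and-fire neuron; return spike times in seconds."""
    v = v_rest
    spike_times: list[float] = []
    for step, i_in in enumerate(current):
        # Forward-Euler step of tau * dV/dt = -(V - V_rest) + R * I
        v += (dt / tau) * (-(v - v_rest) + r * i_in)
        if v >= v_th:
            spike_times.append(step * dt)
            v = v_reset  # fire and reset
    return spike_times


if __name__ == "__main__":
    steps = 5_000  # 0.5 s at dt = 0.1 ms
    for drive in (0.8, 1.5, 3.0):
        n = len(simulate_lif([drive] * steps))
        print(f"constant input {drive}: {n} spikes in 0.5 s")
```

A drive of 0.8 never fires (the voltage settles at 0.8, below threshold), while stronger inputs fire at increasing rates: the spike count encodes input strength, which is the kind of sparse, event-driven signal neuromorphic hardware is built to process.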

So, how “stone age” is silicon?

Silicon electronics have been optimized for ~70 years: we’ve sustained Moore’s law through relentless miniaturization and new transistor structures, materials, and manufacturing techniques (e.g., FinFETs, extreme-ultraviolet lithography, 3D stacking). They are far from “stone age,” but they are hitting physical limits: heat, energy waste, and growing difficulty shrinking features that are now only a few nanometers across. So the push toward photons, biology, quantum, etc. is driven by those limits.

My estimate: When we might see Star Trek style

Putting it all together, if “Star Trek style” means a computer that is fast, efficient, reliable, possibly even integrating biological or photonic/quantum elements, capable of “general intelligence” or at least very flexible performance:

- Optimistic version (if key breakthroughs happen): 30-50 years

- Conservative version (if incremental progress): 50-100 years

- Some specialized components (photonic accelerators, neuromorphic chips, hybrid bio-electronic devices) could be in production in 10-20 years.

Love this topic. Here’s a crisp, source-backed “how close are we?” map for three directions beyond classic digital logic (photonic, organoid “wetware,” and brain-inspired/neuromorphic), plus quantum for context. I give timelines with rough likelihoods (my estimates) and the key blockers to watch.

Photonic processors (compute with light)

Where it is now

- Fully integrated photonic NN chips have been demoed (matrix ops done in light on-chip).

- Lightmatter is piloting photonic accelerators/interconnects with major fabs; precision and manufacturability have just seen notable progress; adoption aimed at AI datacenters.

Main hurdles: compact/low-power nonlinearities, on-chip loss & thermal stability, dense integration with memory/electronics, cost/yield at scale.
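Why nonlinearities top that list: the interferometer meshes in these chips natively compute linear operations (matrix-vector products), and any stack of linear layers collapses into a single matrix. Without a nonlinear step between layers, extra depth adds no expressive power. A tiny numeric check, using arbitrary example weights and ReLU as a stand-in for whatever nonlinearity a real chip would implement optically or electronically:

```python
def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Matrix-matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def relu(v: list[float]) -> list[float]:
    """A common neural-network nonlinearity: clamp negatives to zero."""
    return [max(0.0, x) for x in v]


# Arbitrary example weights for two "layers" and one input vector.
W1 = [[1.0, -2.0], [0.5, 1.0]]
W2 = [[2.0, 1.0], [-1.0, 3.0]]
x = [0.3, 0.4]

two_linear_layers = matvec(W2, matvec(W1, x))        # layer 1 then layer 2, no nonlinearity
one_merged_layer = matvec(matmul(W2, W1), x)         # a single precomputed matrix W2 @ W1
with_nonlinearity = matvec(W2, relu(matvec(W1, x)))  # ReLU between the layers

if __name__ == "__main__":
    print(two_linear_layers, one_merged_layer, with_nonlinearity)
```

The first two results agree (both about [−0.45, 2.15]), so the “deep” linear network was really one layer; only the version with ReLU in between computes something different (about [0.55, 1.65] here).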

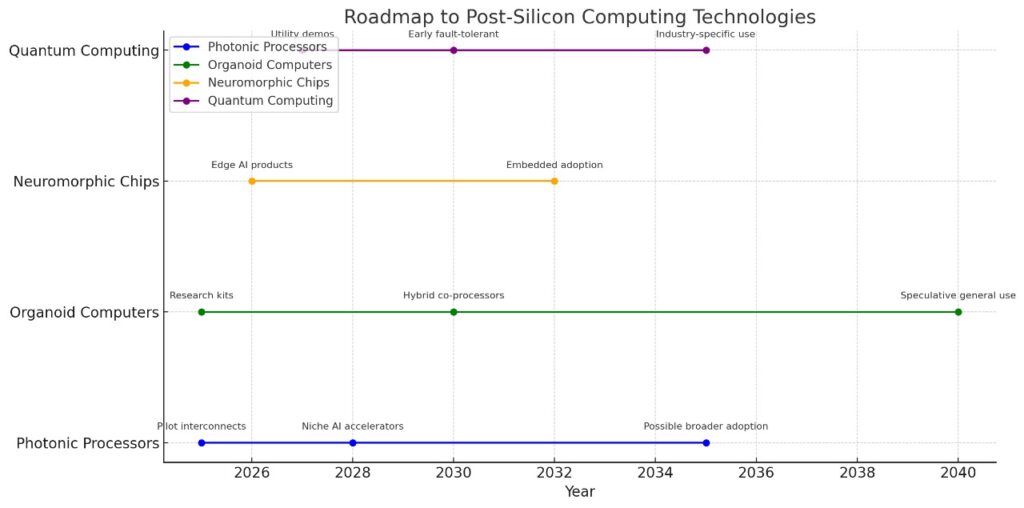

My timeline

- 2025–2028: Pilot deployments for photonic interconnect/AI inference in a few datacenters (40–60%).

- 2028–2032: First commercial photonic AI accelerators shipping in volume for niche workloads (30–50%).

- >2032: Viable path to broader, general-purpose photonic compute if memory/nonlinearity hurdles fall (20–30%).

Organoid / “wetware” computing (living neurons)

Where it is now

- “Organoid Intelligence” is a real research field using brain organoids plus brain-machine interfaces (BMIs) for rudimentary learning tasks; think early proof-of-concept cognition.

- DishBrain showed neurons in vitro learning Pong; Cortical Labs has since offered a small hybrid system (CL1) for labs.

Main hurdles: lifespan/variability, I/O bandwidth, reproducibility, ethics/regulation, and sheer scalability.

My timeline

- 2025–2028: Research kits and cloud access for drug testing and basic reinforcement-learning (RL) tasks; still lab-only (60–70%).

- 2029–2035: Narrow, hybrid bio-electronic “co-processors” for signal classification / adaptive control in research/edge niches (20–30%).

- >2035: Anything like reliable, general computing is speculative (≤10%).

Neuromorphic & compute-in-memory (still silicon, but brain-inspired/analog)

Where it is now

- IBM’s NorthPole prototype showed big efficiency jumps by fusing memory and compute—pointing to fast, low-power AI at the edge. Field still pre-product, but momentum is real.

- Community surveys map a credible path to commercial neuromorphics via mixed-signal/in-memory designs (e.g., phase-change memories).

Main hurdles: software stacks & tools, precision/variability in analog arrays, manufacturability at scale.
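The core trick behind analog compute-in-memory, and the source of the precision/variability hurdle, fits in a few lines. Weights are stored as device conductances G in a crossbar; applying input voltages V to the rows makes each column wire sum the currents I_j = Σ_i G_ij·V_i (Ohm’s law plus Kirchhoff’s current law), so the matrix-vector product happens where the weights are stored. The sketch models device-to-device variability as hypothetical multiplicative Gaussian noise and ignores wire resistance, converter precision, and the differential device pairs often used for signed weights.

```python
import random


def crossbar_mvm(g: list[list[float]], v: list[float]) -> list[float]:
    """Ideal crossbar: column current j = sum over rows i of G[i][j] * V[i]."""
    return [sum(g[i][j] * v[i] for i in range(len(v))) for j in range(len(g[0]))]


def with_variability(g: list[list[float]], rel_sigma: float, rng: random.Random) -> list[list[float]]:
    """Programmed conductances deviate from their targets (assumed multiplicative Gaussian noise)."""
    return [[gij * (1.0 + rng.gauss(0.0, rel_sigma)) for gij in row] for row in g]


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical 4x3 array: conductances in microsiemens, row voltages in volts -> currents in microamps.
    target_g = [[rng.uniform(1.0, 10.0) for _ in range(3)] for _ in range(4)]
    volts = [0.2, 0.1, 0.0, 0.3]
    ideal = crossbar_mvm(target_g, volts)
    for sigma in (0.01, 0.05, 0.10):
        noisy = crossbar_mvm(with_variability(target_g, sigma, rng), volts)
        worst = max(abs(n - i) / abs(i) for n, i in zip(noisy, ideal))
        print(f"{sigma:.0%} device variation -> worst column error {worst:.1%}")
```

Output errors grow roughly in step with device variation, which is why analog arrays are usually aimed at error-tolerant workloads such as neural-network inference.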

My timeline

- 2026–2030: Early edge-AI products (vision, robotics) using near-/in-memory neuromorphic chips (50–60%).

- 2030–2035: Wider embedded adoption; still not general-purpose CPUs (40–50%).

Quantum (context: not “Data’s brain,” but relevant non-silicon path)

Where it is now

- Error-corrected logical qubits are progressing (Google’s below-threshold surface-code results on its Willow chip; color-code experiments; IBM’s qLDPC codes).

- Aggressive roadmaps: IBM targets fault-tolerant systems near 2029; PsiQuantum (photonic qubits) raised $1B, aims for utility-scale sites by 2027–2029.

Main hurdles: reduce physical error rates, scale cryo/control, practical algorithms with real advantage.
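Why reducing physical error rates comes first: error correction only pays off once physical errors are rare enough. The simplest illustration is a distance-d repetition code that stores one bit in d copies and decodes by majority vote, failing only when more than half the copies flip: P_L = Σ_{k=(d+1)/2..d} C(d, k)·p^k·(1 − p)^(d−k). This is a classical toy with independent bit flips and perfect readout, assumed for illustration; the surface, color, and qLDPC codes mentioned above protect against more kinds of errors and have much lower thresholds (around 1% for the surface code under common noise models), but the qualitative behavior is the same.

```python
from math import comb


def logical_error_rate(p: float, d: int) -> float:
    """Probability that majority-vote decoding of a distance-d repetition code fails,
    given independent flips with probability p on each of the d copies (d odd)."""
    if d % 2 == 0:
        raise ValueError("use an odd distance so majority vote never ties")
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range((d + 1) // 2, d + 1))


if __name__ == "__main__":
    for p in (0.01, 0.2, 0.6):
        rates = ", ".join(f"d={d}: {logical_error_rate(p, d):.2e}" for d in (1, 3, 5, 7))
        print(f"p={p}: {rates}")
```

For p = 0.01 each step up in distance cuts the logical error rate by roughly a factor of 30 (0.01, then about 3e-4, then about 1e-5), while for p = 0.6, above this toy code’s 50% threshold, adding copies makes things worse.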

My timeline

- 2027–2030: Credible utility-scale demos on narrow apps (30–50%).

- >2030: Early fault-tolerant systems for specific industries; broad impact follows slowly.

Bottom line vs. Star Trek’s Data (whose brain is canonically “positronic”)

- Closest in feel, near-term: Photonic accelerators/interconnects augmenting silicon for AI—within this decade in niche roles.

- Most exotic but farthest: Organoid computers—beautiful science, but decades from dependable, scalable computation.

- Practical impact curve beyond conventional silicon (next 10–15 yrs): Neuromorphic edge → Photonic AI/datacenter → Quantum utility (narrow wins first).